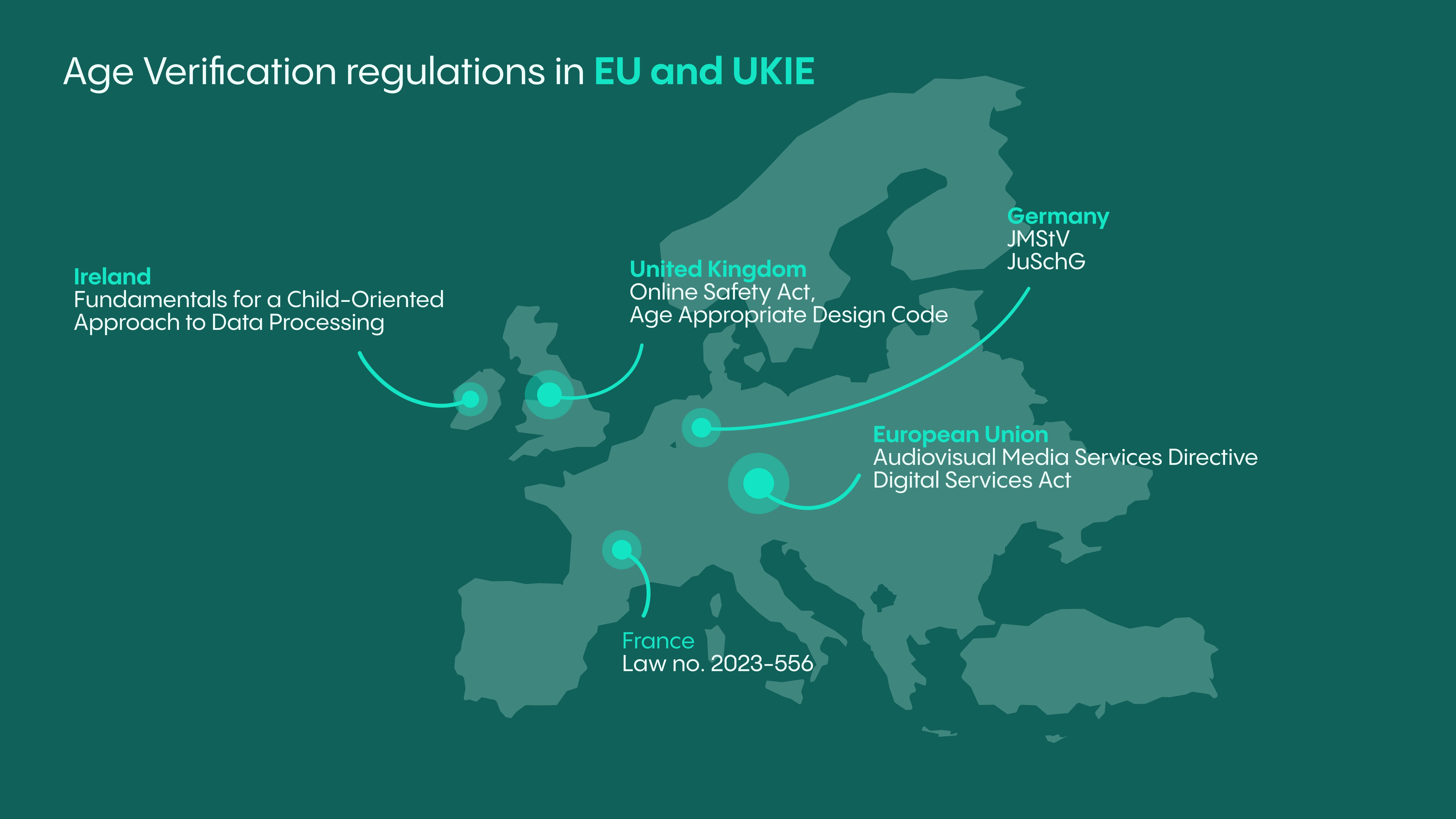

Age verification regulations in the EU and the UKIE

Several countries are introducing legislation to protect children from accessing harmful content online. Efforts are also increasing at the EU level and in the UK and Ireland (UKIE), where governments have enacted new regulations to protect children from dangerous online content. Here are some of the latest and most impactful laws.

Dmytro Sashchuk

In an increasingly digital world, the protection of minors from harmful content is of paramount concern for governments worldwide. While the extent of minors’ exposure to inappropriate content varies between countries, the EU Kids Online 2020 report found that, on average, 33% of children aged 9-16 had seen sexual images. The same study found that around 10% of children aged 12-16 consume harmful content at least monthly. The data gives governments and regulators aiming to shield young people solid arguments for laws that require age verification solutions to minimize children's exposure to adult content.

As a result, age verification has become one of the hottest topics in the identity verification space, as jurisdictions globally crack down on freely accessible content that is detrimental to young people while limiting data collection from underage users. Regulators in countries such as the UK, Germany, Spain, and France are signaling to businesses that implementing appropriate age verification systems is urgent. From social media platforms to streaming services, online businesses across all sectors must keep up with developing age verification requirements to meet their compliance obligations. Doing so also helps build trust among service users and boosts brand reputation.

Therefore, to avoid hefty fines or other liabilities, online businesses must seriously consider using age verification technologies that let users verify their age easily and conveniently and that ensure only adults can access adult content. Failure to follow age verification requirements can bring serious legal consequences and significant reputational damage. Companies that fail to protect children also risk losing customers.

With a thorough grounding in the legislative framework, our legal experts are also perfectly placed to assist you in determining the EU’s and UK's most important, applicable, and upcoming age verification regulations. As we delve into the specifics of age verification laws in various countries, it becomes evident that while the overarching goal remains consistent - to safeguard minors - the approaches may vary. Let’s examine how jurisdictions tackle this critical issue within their legal frameworks.

1. European Union

Audiovisual Media Services Directive

Regulation overview:

The EU's Audiovisual Media Services Directive (AVMSD) governs EU-wide coordination of national legislation on all audiovisual media, both traditional TV broadcasts and on-demand services. The nature of this legal act is such that it only creates tools for Member States to make an effective and (somewhat) harmonized playing field for all EU audiovisual media service providers. In practical terms, the AVMSD is the underlying reason many EU countries have implemented regulations requiring age restriction measures, as such measures are among the most effective tools for preventing minors’ access to harmful materials. The latest review of the AVMSD was carried out in 2018.

Applicability:

While the AVMSD does not create direct obligations for service providers on its own, it establishes the goals that each Member State must achieve by aligning its national law with the objectives set out in the AVMSD. In practice, this means the AVMSD affects relevant service providers only indirectly, but applies directly to Member States, requiring them to create measures for EU audiovisual media service providers.

Notable requirements for each Member State are to:

- Ensure that a media service provider under their jurisdiction makes basic business information easily, directly, and permanently accessible to the end-users.

- Ensure that audiovisual media services provided in their jurisdiction do not contain any incitement to violence or hatred directed against a group of persons or a member of a group and/or public provocation to commit a terrorist offense.

- Ensure that audiovisual media services under their jurisdiction, which may impair the physical, mental, or moral development of minors, are only available in such a way as to ensure that minors will not normally hear or see them.

- Ensure that audiovisual media services under their jurisdiction are made continuously more accessible to persons with disabilities through proportionate means.

Link:

Audiovisual Media Services Directive 2010/13/EU

Status:

- The original AVMSD was signed into law on March 10, 2010

- The original AVMSD has applied from May 5, 2010

- The amended AVMSD has applied from Dec 18, 2018

Exemptions, if any:

Exemptions are generally determined by Member States.

Applicable sanctions:

Imposed on a national level.

Supervisory authority:

Each Member State must designate one or more national regulatory bodies. Additionally, on the EU level, the European Regulators Group for Audiovisual Media Services (ERGA) was created with the task, among others, of ensuring consistent implementation of the AVMSD.

Regulation (EU) 2022/2065 of the European Parliament and of the Council of 19 October 2022 on a Single Market For Digital Services and amending Directive 2000/31/EC (Digital Services Act or DSA)

Regulation overview:

The Digital Services Act, commonly referred to as DSA, is one of the latest impactful products of EU legislators in the realm of the prevention of illegal and harmful activities online. DSA, among others, regulates social media platforms, including networks (such as Facebook) or content-sharing platforms (such as TikTok), app stores, and marketplaces. The requirements under DSA can be broken down into tiers. While there is a “general” tier that applies to all regulated entities, other requirements apply depending on the nature of the service provided.

Applicability:

The DSA applies to intermediary services providing information society services, such as a ‘mere conduit’ service, a ‘caching’ service, or a ‘hosting’ service.

Namely, this includes the following:

- Internet access providers,

- hosting services such as cloud computing and web-hosting services,

- domain name registrars,

- online marketplaces,

- app stores,

- collaborative economy platforms,

- social networks,

- content-sharing platforms,

- online travel and accommodation platforms

As mentioned, the DSA’s effects are tiered depending on the nature of the service provided by the intermediary. The first tier (i.e., the 'general' requirements) applies to all intermediary services listed above. Some notable general requirements include:

- All intermediary services must designate a single point of contact to communicate with EU authorities and recipients of the service.

- All intermediary services must make publicly available and easily accessible reports in machine-readable format on content moderation practices they employ.

The second tier of requirements is specific to the type of service, depending on whether the intermediary provides hosting, an online platform, or an online marketplace:

For hosting services, notable requirements include:

- Implementing a ‘notice and action mechanism’ whereby users can flag illegal content for the service provider to remove.

- Providing a ‘statement of reasons’ to any user whose information is restricted by the service provider due to illegality.

For online platforms, notable requirements include:

- Prioritizing notifications of illegal content by ‘trusted flaggers’ and suspending service recipients who misuse the platform by providing illegal content or submitting manifestly unfounded complaints.

- Ensuring transparency with regard to any advertising on the platform or any recommender systems used by the platform.

- Putting in place measures to ensure a high level of privacy, safety, and security of service recipients who are minors.

For online marketplaces, notable requirements include:

- Obtaining and displaying to consumers details of the traders who use their services to market their goods/services.

- Designing their online interfaces in a way that enables traders to comply with their obligations regarding pre-contractual information, compliance, and product safety information under EU law.

- Informing consumers who have been offered an illegal product/service of the illegality and possible means of redress.

Finally, the DSA has separate requirements that apply to particularly large entities designated as Very Large Online Platforms or Very Large Online Search Engines. Their notable requirements include:

- Assessing, mitigating, and responding to risks emerging from the use of their services.

- Ensuring compliance with the DSA by implementing independent audits, providing requested data to regulators, and establishing a compliance officer.

- Paying a supervisory fee to the European Commission to cover their supervision costs.

Link:

Status:

Entered into force on November 16, 2022;

Started to apply on February 17, 2024 (except for a few articles designated to become applicable at later dates).

Applicable sanctions:

The precise amount of fines is determined by the national authority designated as supervisor under DSA and may differ from country to country. DSA, however, limits the maximum amount of fines that can be imposed for failure to comply with DSA requirements, namely:

- 6% of the worldwide annual turnover in the preceding financial year for failure to comply with an obligation under DSA;

- 1% of the annual income or the worldwide annual turnover in the preceding financial year for supplying false, incomplete, or incorrect information or failure to submit to inspection; and

- 5% of the average daily worldwide turnover or income per day as a periodic penalty.
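To make the three caps concrete, here is a minimal sketch that turns the percentages listed above into amounts for a given turnover. The function and class names are hypothetical, and dividing annual turnover by 365 to approximate "average daily turnover" is our own simplifying assumption, not a rule taken from the DSA. Actual fines are set by the competent authority and may be far lower than these ceilings.

```python
from dataclasses import dataclass
from decimal import Decimal

# Maximum rates as listed in the article above.
NON_COMPLIANCE_RATE = Decimal("0.06")      # 6% of worldwide annual turnover
INCORRECT_INFO_RATE = Decimal("0.01")      # 1% of annual income or turnover
PERIODIC_PENALTY_RATE = Decimal("0.05")    # 5% of average daily turnover, per day

# Assumption for illustration only: average daily turnover = annual / 365.
DAYS_PER_YEAR = Decimal(365)


@dataclass(frozen=True)
class DsaFineCaps:
    non_compliance: Decimal
    incorrect_information: Decimal
    periodic_penalty_per_day: Decimal


def dsa_fine_caps(annual_worldwide_turnover: Decimal) -> DsaFineCaps:
    """Return the maximum fine amounts for the given preceding-year turnover."""
    if annual_worldwide_turnover < 0:
        raise ValueError("turnover must not be negative")
    average_daily = annual_worldwide_turnover / DAYS_PER_YEAR
    return DsaFineCaps(
        non_compliance=NON_COMPLIANCE_RATE * annual_worldwide_turnover,
        incorrect_information=INCORRECT_INFO_RATE * annual_worldwide_turnover,
        periodic_penalty_per_day=PERIODIC_PENALTY_RATE * average_daily,
    )


if __name__ == "__main__":
    caps = dsa_fine_caps(Decimal("365000000"))
    print(caps)
```

`Decimal` is used instead of floats so that the monetary amounts are not affected by binary rounding.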

Supervisory authority:

Each EU country has been tasked with designating the authority that would be responsible for the supervision and enforcement of the DSA. The most up-to-date information on the designation of national authorities can be found here.

Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs), on the other hand, are directly supervised by the European Commission.

Germany

Interstate Treaty on the Protection of Human Dignity and the Protection of Minors in the Media

Regulation overview:

The German legal framework, while one of the oldest, is arguably one of the most comprehensive when it comes to the protection of minors in media. The Interstate Treaty on the Protection of Human Dignity and the Protection of Minors in the Media (JMStV) and the Federal Act on Protection of Minors (JuSchG) form the backbone of protections that aim to safeguard children and adolescents by requiring media providers to impose age restrictions on content that may impair the normal development of children. The JMStV is notable because it established the Commission for Protection of Minors in Media (known as KJM). It also gave the KJM the power to establish a framework for accrediting age verification solutions, such as Veriff.

Applicability:

The JMStV applies to media providers, whether broadcasters or telemedia service providers. To reflect the fast-paced technological development in this sphere and to incorporate the requirements of AVMSD, the scope of businesses that have to comply with JMStV was expanded to include video-sharing services in 2020.

Notable requirements of the JMStV include:

- To prevent or block any content deemed illegal under the German Criminal Code as specified in Articles 4(1) and (2) of JMStV.

- To ensure that content harmful to minors is made available only to the appropriate age category of users, while other age groups are obstructed from accessing it through suitable means.

- For video-sharing platforms where they supply content harmful to the development of minors, to introduce and apply age verification systems authorized by KJM and enable parental controls.

- To ensure that advertisements conform to the same content restrictions as the service itself.

- For providers of fee-based television services that involve harmful content to appoint a child protection officer.

- To ensure compliance with broadcast times restrictions where the content provided may negatively impact children.

Link:

Status:

In force since 2003

Exemptions, if any:

Not applicable; however, the JMStV permits certain derogations authorized by KJM or German federal state media authorities (see Article 9 of JMStV).

Applicable sanctions:

KJM can enforce the JMStV by preventing German users’ access to noncompliant services. Service providers who violate JMStV’s requirements may be imprisoned or fined up to EUR 300,000.

Supervisory authority:

KJM

Federal Act on Protection of Minors

Regulation overview:

The Federal Act on Protection of Minors (JuSchG), while broader than JMStV, also contains requirements relevant to businesses regarding the protection of minors. The main difference is that the JuSchG imposes precautionary requirements intended to keep minors' experiences safe, particularly in the digital environment.

Applicability:

The JuSchG is considerably broader than the JMStV and contains requirements that may be relevant not only to media service providers but also to other businesses. For example, this law restricts minors’ attendance at restaurants and public dance events and prohibits alcohol consumption by minors; consequently, these restrictions must be applied by whichever business organizes such activities. At the same time, the JuSchG contains provisions that are relevant specifically to media providers.

Notable requirements of the JuSchG include:

- For films and games to include a mandatory marking approved by state authorities or authorized self-regulation organizations and ensure that they are released only to permitted age groups.

- For media distributors who organize public film events (i.e. cinemas) to restrict attendance of the event only to the permitted age groups.

- Imposing restrictions on media carriers that are labeled as “Not approved for youth”, namely a prohibition on offering them via mail order or distributing them through means where customers may obtain them without any controls.

- Additionally, the JuSchG prohibits including media that the supervisory authority has determined to be harmful to minors on media carriers.

Link:

Status:

In force since 2003

Exemptions, if any:

It is not applicable; however, the JuSchG permits certain unique derogations depending on the restriction.

Applicable sanctions:

Service providers who violate the JuSchG risk not only administrative fines ranging from EUR 50,000 to EUR 5,000,000 but also penal sanctions, including imprisonment.

Supervisory authority:

France

Law no. 2023-556

Regulation overview: The law imposes a set of obligations on providers of online social networking services with the aim of safeguarding children from the negative effects of exposure to the internet and safeguarding the digital environment from cyber harassment.

Applicability: The law imposes the following requirements on providers of online social networking services (i.e. social media platforms) operating in France.

Some notable requirements include:

- providers must refuse the registration of minors under the age of 15 for their services, unless the authorization of a parent or guardian has been obtained;

- the same conditions apply to already existing accounts, meaning that the express authorization of one parent or guardian must be obtained as soon as possible;

- a parent or guardian must be able to ask the online social networking service to suspend the account of their child under the age of 15;

- the online social networking service must verify the age of its underage users and of holders of parental authority. For this purpose, it must use technical solutions that comply with a reference framework published by the Regulatory Authority for Audiovisual and Digital Communication (Arcom) after consulting the National Commission on Informatics and Liberty (CNIL).
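The registration rule above can be sketched as a simple decision function. This is an illustration only: the function name and parameters are hypothetical, and it assumes the user's age and the parental authorization have already been verified through a solution that meets the Arcom reference framework, which the sketch does not attempt to model.

```python
# Age below which parental authorization is required, as described in the article.
PARENTAL_AUTHORIZATION_AGE = 15


def may_register(verified_age: int, has_parental_authorization: bool) -> bool:
    """Decide whether a registration may proceed.

    Assumes `verified_age` and `has_parental_authorization` come from a
    compliant verification step that is outside the scope of this sketch.
    """
    if verified_age < 0:
        raise ValueError("age must not be negative")
    if verified_age >= PARENTAL_AUTHORIZATION_AGE:
        return True
    # Under 15: registration is refused unless a parent or guardian authorized it.
    return has_parental_authorization


if __name__ == "__main__":
    for age, consent in [(16, False), (14, True), (14, False)]:
        print(age, consent, may_register(age, consent))
```

The same check would apply to existing accounts, since the law extends the authorization requirement to them.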

Link: Law no. 2023-556

Status:

- Law no. 2023-556 was signed into law on July 7, 2023.

- Law no. 2023-556 will generally come into force on the date to be further specified by a legislative decree.

Exemptions, if any: The obligations do not extend to non-profit online encyclopedias and/or to non-profit educational and/or scientific directories.

Applicable sanctions: Fines up to 1% of the company's annual global turnover.

United Kingdom

Online Safety Act

Regulation overview: The Online Safety Act is a set of regulations to protect children and adults from illegal and harmful content online. It makes providers of internet services and providers of search engines more responsible for their users' safety on their platforms.

Applicability: The Online Safety Act imposes legal requirements on:

- providers of internet services which allow users to encounter content generated, uploaded or shared by other users;

- providers of search engines which enable users to search multiple websites and databases; and

- providers of internet services which publish or display pornographic content (meaning pornographic content published by a provider).

As well as UK service providers, the Act applies to providers of regulated services based outside the UK where they fall within the scope of the Act.

Platforms will be required to:

- remove illegal content,

- prevent children from accessing age-inappropriate content,

- enforce age limits and age-checking measures,

- provide parents and children with clear and accessible ways to report problems online when they do arise.

On 5 December 2023, OFCOM published guidance on the age assurance measures that online services publishing pornographic content must implement to comply with the Online Safety Act. The guidance is valuable to service providers because it addresses which age verification systems can be considered effective for meeting their obligations under the Act. Examples include photo-identification matching systems that use government-issued identity documents, such as ID cards or driver's licenses, and facial age estimation, which estimates age by analyzing facial features.

Link: Online Safety Act

Status: The Act received royal assent and became law on October 26, 2023.

Applicable sanctions: Fines of up to £18m or 10% of annual global turnover, whichever is greater. Criminal action against senior managers.
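The "whichever is greater" rule means the ceiling depends on company size: for smaller businesses the fixed £18m figure dominates, while for large ones the 10% figure does. A minimal sketch of that comparison follows; the function name is hypothetical, and the result is only the statutory maximum described above, not a prediction of any actual penalty.

```python
from decimal import Decimal

# Figures as stated in the article above.
FIXED_CAP_GBP = Decimal("18000000")   # £18m
TURNOVER_RATE = Decimal("0.10")       # 10% of annual global turnover


def online_safety_act_max_fine(annual_global_turnover_gbp: Decimal) -> Decimal:
    """Return the greater of £18m and 10% of annual global turnover."""
    if annual_global_turnover_gbp < 0:
        raise ValueError("turnover must not be negative")
    return max(FIXED_CAP_GBP, TURNOVER_RATE * annual_global_turnover_gbp)


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("1000000000")):
        print(turnover, online_safety_act_max_fine(turnover))
```

The crossover point is a turnover of £180m, above which the percentage-based cap becomes the larger of the two.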

Supervisory authority:

The Office of Communications (OFCOM)

Age Appropriate Design Code

Regulation overview: The Children’s Code (or the Age Appropriate Design Code) contains 15 standards that online services need to follow in order to protect children's data in an online environment.

Applicability: The code applies to information services likely to be accessed by children (including apps, programs, search engines, social media platforms, online marketplaces, online games, content streaming services, and any websites offering other goods or services to users over the internet).

Some notable requirements include:

- Age-appropriate application: Take a risk-based approach to recognizing the age of individual users and ensure the effective application of the standards stipulated in the Code to child users;

- Transparency: Provide privacy information and other published terms, policies, and community standards in concise, prominent, and clear language suited to the age of the child;

- Default settings: Set the privacy setting to high by default, unless there is a compelling, demonstrable reason for a different setting;

- Data minimisation: Collect and retain only the minimum amount of personal information that the company needs to provide the elements of the service in which a child is actively and knowingly engaged.

Link: Age Appropriate Design Code

Status: This code came into force on September 2, 2020, with a 12-month transition period.

Exemptions, if any: The requirements do not apply to some services provided by public authorities; websites which just provide information about a real-world business or service; traditional voice telephony services; general broadcast services; and preventative or counseling services.

Applicable sanctions: Enforcement takes place under UK GDPR and the Privacy and Electronic Communications Regulations. Online services can be issued assessment notices, warnings, reprimands, enforcement notices, and penalty notices. For serious breaches of the data protection principles, GDPR fines of up to €20m or 4% of annual worldwide turnover, whichever is higher, can be issued.

Supervisory Authority:

Information Commissioner's Office (ICO)

Ireland

Fundamentals for a Child-Oriented Approach to Data Processing

Regulation overview:

Fundamentals for a Child-Oriented Approach to Data Processing is a set of standards that online services must follow to protect children's data in an online environment. The standard outlines the 14 fundamental principles that must be followed.

Applicability:

The Data Protection Commission considers that organizations should comply with the standards and expectations established in these fundamentals when the services provided by the organization are directed at, intended for, or likely to be accessed by children.

Some notable requirements include:

- Floor of protection: Online service providers should provide a "floor" of protection for all users unless they take a risk-based approach to verifying the age of their users so that the protections afforded in this Standard are applied to all processing of children's data.

- Clear-cut consent: When a child has given consent for their data to be processed, that consent must be freely given, specific, informed, and unambiguous, made through a clear statement or affirmative action.

- Know your audience: Online service providers should take steps to identify their users and ensure that services directed at, intended for, or likely to be accessed by children have child-specific data protection measures in place.

- Minimum user ages aren't an excuse: Theoretical user age thresholds for accessing services do not displace the obligations of organizations to comply with the controller obligations under the GDPR and the standards and expectations set out in this Standard where "underage" users are concerned.

Link:

Fundamentals for a Child-Oriented Approach to Data Processing

Applicable sanctions:

- Not provided since this is not a standalone law.

- Non-compliance would likely be enforced under GDPR.

Supervisory authority:

Data Protection Commission

2. Actionable insights for your business

Familiarize yourself with laws and regulations

To pave the way for compliance, businesses should first familiarize themselves with the relevant laws and regulations in the regions where they operate. This includes understanding the scope, the specific age thresholds for different types of content, authorized age verification measures, and the penalties for non-compliance. Informed legal counsel can provide valuable guidance in this area.

Assess current practices

Businesses should assess their current practices and identify potential gaps in age verification. This process ranges from reviewing website design and user registration processes to specific content access controls. It’s important to remember that age verification is not a one-size-fits-all solution; what works for one business in one jurisdiction may not work for another.

Apply age verification systems

Implementing robust age verification systems is a crucial step. These systems can be very different, with simple forms, such as self-declaration, becoming less and less attractive. Meanwhile, more sophisticated methods such as identity verification services have become more suitable for compliance purposes. Even though robust age verification solutions typically require more resources, they offer greater accuracy and reliability, and informed businesses should weigh the costs and benefits of different systems to determine the most suitable option.

Train internal staff

Training internal staff to handle age verification is equally important. Employees should understand the importance of age verification, know how to use the systems in place, and be able to handle any issues that arise, particularly those that are outlined within the applicable laws or regulations. Regular training sessions can help ensure that staff are up-to-date with the latest practices and regulations.

Stay up-to-date

Finally, businesses should always aim to stay up-to-date, which involves regular reviews and updates to their age verification practices. As laws and technologies constantly evolve, businesses need to stay ahead of these changes to remain compliant and efficient. Regular audits help identify issues early and allow for timely rectification.

3. How can Veriff help?

Veriff operates globally, and our online age verification allows our customers to confirm a person’s eligibility to access restricted content with little to no friction. Veriff has an arsenal of age verification solutions that can be used to verify that a person has reached the required age. For example, age validation uses government-issued identity documents, such as an ID card or driver's license, while age estimation allows users to verify their age without providing identity documents. This means that our customers can stay compliant with existing laws requiring the implementation of age verification systems and stay ahead of the curve.

Veriff’s Age Validation solution enables your business to seamlessly confirm whether or not users are above a minimum age threshold. Choose to grant access to age-appropriate users while underage users are automatically declined.
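At its core, a minimum-age check compares a verified date of birth against a threshold. The sketch below illustrates that logic in general terms; it is not Veriff's API or implementation, the function names are hypothetical, and it assumes the date of birth has already been extracted from a verified identity document.

```python
from datetime import date
from typing import Optional


def age_on(date_of_birth: date, on_date: date) -> int:
    """Return completed years of age on `on_date`."""
    if date_of_birth > on_date:
        raise ValueError("date of birth is in the future")
    # Has this year's birthday already happened (or is it today)?
    had_birthday = (on_date.month, on_date.day) >= (
        date_of_birth.month,
        date_of_birth.day,
    )
    return on_date.year - date_of_birth.year - (0 if had_birthday else 1)


def meets_minimum_age(
    date_of_birth: date, minimum_age: int, on_date: Optional[date] = None
) -> bool:
    """Return True if the person has reached `minimum_age` on `on_date`."""
    reference = on_date if on_date is not None else date.today()
    return age_on(date_of_birth, reference) >= minimum_age


if __name__ == "__main__":
    dob = date(2006, 6, 15)
    print(meets_minimum_age(dob, 18, on_date=date(2024, 6, 14)))
    print(meets_minimum_age(dob, 18, on_date=date(2024, 6, 15)))
```

Note that this sketch treats a person born on February 29 as reaching their birthday on March 1 in non-leap years; the applicable rule can differ by jurisdiction, so a production system should confirm the convention that applies.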

Veriff’s Age Estimation solution uses facial biometric analysis to estimate a user’s age without requiring the user to provide an identity document. This low-friction solution helps you convert more users faster and makes it easy to verify users who want to access age-gated products or services but do not want to share their identity documents, for example for privacy or ethical reasons.

Veriff's age verification solutions are uniquely positioned to support platforms like SuperAwesome in their efforts to create a safer online environment for children. By seamlessly integrating Veriff's age validation and estimation tools, SuperAwesome can ensure that only users above the required age threshold access their platforms, effectively enhancing safety measures. This collaboration enables SuperAwesome to uphold its commitment to safeguarding young audiences while providing developers with the necessary tools to comply with regulatory requirements and foster a secure online community.
